Managing Infrastructure with Ansible

A step-by-step guide to setting up an Ansible project for managing infrastructure.

This post details my process of automating server configurations with Ansible, applying specific roles to different node types. I used Vagrant for local testing to ensure consistency, demonstrating a practical approach for DevOps and SRE workflows.

Setting Up the Prerequisites

I installed Python, Ansible, Vagrant, and VirtualBox.

Install Python and Ansible

- Create a virtual environment:

      python3 -m venv ansible-devops-demo-venv
      source ansible-devops-demo-venv/bin/activate

- Install Ansible:

      pip install ansible

- Verify:

      ansible --version

Install Vagrant and VirtualBox

- Download VirtualBox from virtualbox.org.

- Download Vagrant from vagrantup.com.

- Verify:

      vagrant --version

Configuring Ansible

I created the Ansible structure to define my infrastructure, starting with the configuration file and inventory. I set up four nodes (two web servers, a database, and a monitoring server).

Create ansible.cfg

In ansible.cfg:

    [defaults]
    inventory = ./inventories/development
    roles_path = ./roles
    host_key_checking = False

    [privilege_escalation]
    become = True
    become_method = sudo
    become_user = root
    become_ask_pass = False

Define the Inventory

In inventories/development/hosts.yml:

    ---
    all:
      children:
        webservers:
          hosts:
            web01:
              ansible_host: 127.0.0.1
              ansible_port: 2222
              ansible_ssh_private_key_file: "{{ vagrant_key_web1 }}"
            web02:
              ansible_host: 127.0.0.1
              ansible_port: 2200
              ansible_ssh_private_key_file: "{{ vagrant_key_web2 }}"
        databases:
          hosts:
            db01:
              ansible_host: 127.0.0.1
              ansible_port: 2201
              ansible_ssh_private_key_file: "{{ vagrant_key_db01 }}"
        monitoring:
          hosts:
            monitor01:
              ansible_host: 127.0.0.1
              ansible_port: 2202
              ansible_ssh_private_key_file: "{{ vagrant_key_monitor01 }}"
      vars:
        ansible_user: vagrant
        vagrant_key_web1: .vagrant/machines/web1/virtualbox/private_key
        vagrant_key_web2: .vagrant/machines/web2/virtualbox/private_key
        vagrant_key_db01: .vagrant/machines/db01/virtualbox/private_key
        vagrant_key_monitor01: .vagrant/machines/monitor01/virtualbox/private_key

Add Group Variables

In inventories/development/group_vars/all.yml, I defined shared variables:

    ---
    env: development
    app_name: ansible demo
    app_version: 1.0.0
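
Because these variables live in group_vars/all.yml, every host in the inventory can reference them in tasks and templates. As a minimal sketch (the task below is hypothetical and not part of the roles in this post), a debug task could print them:

```yaml
# Hypothetical task for illustration; not part of the original roles.
# It prints the shared variables defined in group_vars/all.yml.
- name: Show application metadata
  ansible.builtin.debug:
    msg: "{{ app_name }} {{ app_version }} running in {{ env }}"
```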

Creating Roles and Playbooks

With the inventory and variables defined, I created roles to manage different types of servers and applied them to their respective groups.

Create the Common Role

In roles/common/tasks/main.yml, I added tasks to update packages and install essentials:

    ---
    - name: Update package cache
      ansible.builtin.apt:
        update_cache: true
        cache_valid_time: 3600
      become: true
      when: ansible_os_family == "Debian"

    - name: Install base packages
      ansible.builtin.package:
        name:
          - vim
          - curl
          - htop
        state: present
      become: true

In roles/common/tasks/security.yml, I hardened the servers by enabling a firewall and restricting SSH access:

    ---
    - name: Install ufw
      ansible.builtin.apt:
        name: ufw
        state: present
      become: true

    # Allow SSH before enabling the firewall so the Ansible connection is not cut off.
    - name: Allow SSH
      community.general.ufw:
        rule: allow
        port: 22
        proto: tcp
      become: true

    - name: Enable ufw
      community.general.ufw:
        state: enabled
      become: true

    - name: Disable root login
      ansible.builtin.lineinfile:
        path: /etc/ssh/sshd_config
        regexp: '^PermitRootLogin'
        line: 'PermitRootLogin no'
      become: true
      notify: Restart SSH

    - name: Disable password authentication
      ansible.builtin.lineinfile:
        path: /etc/ssh/sshd_config
        regexp: '^PasswordAuthentication'
        line: 'PasswordAuthentication no'
      become: true
      notify: Restart SSH

Disabling root login and password authentication reduces the risk of unauthorized access, a key SRE practice.
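
Ansible automatically loads only tasks/main.yml from a role, so the tasks in security.yml run only if something includes them explicitly. The main.yml shown earlier does not do this, so the following is an assumption about how the two files could be wired together:

```yaml
# Appended to roles/common/tasks/main.yml (an assumption; the original
# file does not show this task). Statically includes the hardening tasks.
- name: Apply security hardening
  ansible.builtin.import_tasks: security.yml
```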

In roles/common/handlers/main.yml, I added a handler to restart SSH when needed:

    ---
    - name: Restart SSH
      ansible.builtin.service:
        name: sshd
        state: restarted
      become: true
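
A malformed sshd_config followed by a restart can lock you out of a host. As a hedged sketch, the validate option of lineinfile can run sshd's built-in configuration test against the edited file before it replaces the real one. The /usr/sbin/sshd path is an assumption for Ubuntu, and this variant is not part of the original role:

```yaml
# Variant of the "Disable root login" task with a syntax check added.
# validate runs the command against a temporary copy (%s) and only
# replaces /etc/ssh/sshd_config if the command exits successfully.
- name: Disable root login (with config validation)
  ansible.builtin.lineinfile:
    path: /etc/ssh/sshd_config
    regexp: '^PermitRootLogin'
    line: 'PermitRootLogin no'
    validate: /usr/sbin/sshd -t -f %s
  become: true
  notify: Restart SSH
```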

In roles/common/meta/main.yml, I added the following metadata:

    ---
    galaxy_info:
      author: Rick Houser
      description: Common server configuration for all nodes
      license: MIT
      min_ansible_version: "2.9"
      platforms:
        - name: Ubuntu
          versions:
            - focal
    dependencies: []

Web Server Role

In roles/web_server/tasks/main.yml, I installed Nginx on the web servers:

    ---
    - name: Install nginx
      ansible.builtin.package:
        name: nginx
        state: present
      become: true
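
Installing the package is only part of serving traffic. A possible follow-up, assuming the ufw rules from the common role are active and nginx listens on the default HTTP port, is to make sure the service is running and that port 80 is open. These tasks are illustrative additions, not part of the original role:

```yaml
# Hypothetical additions to roles/web_server/tasks/main.yml.
- name: Ensure nginx is running and starts at boot
  ansible.builtin.service:
    name: nginx
    state: started
    enabled: true
  become: true

- name: Allow HTTP through the firewall
  community.general.ufw:
    rule: allow
    port: "80"
    proto: tcp
  become: true
```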

Database Role

In roles/database/tasks/main.yml, I installed PostgreSQL on the database server:

    ---
    - name: Install postgresql
      ansible.builtin.package:
        name: postgresql
        state: present
      become: true

Monitoring Role

In roles/monitoring/tasks/main.yml, I installed monitoring tools on the monitoring node:

    ---
    - name: Install htop
      ansible.builtin.package:
        name: htop
        state: present
      become: true

Create a Playbook

In playbooks/site.yml, I applied the roles to their respective groups:

    ---
    - name: Apply common configuration to all nodes
      hosts: all
      roles:
        - common

    - name: Configure web servers
      hosts: webservers
      roles:
        - web_server

    - name: Configure database servers
      hosts: databases
      roles:
        - database

    - name: Configure monitoring servers
      hosts: monitoring
      roles:
        - monitoring

Managing Secrets with Ansible Vault

I configured Ansible Vault to demonstrate secure management of sensitive data, though it’s not used in this playbook.

Set Up a Vault Password File

    echo 'AnsibleDemo2025!' > .vault_pass.txt
    chmod 600 .vault_pass.txt

Use a strong password for the vault file and store it securely, as it’s required to decrypt sensitive data.
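
The password file only becomes useful once something is encrypted with it. As a sketch of how it could be wired in, assume a file vars/secrets.yml (the path excluded in the yamllint configuration later in this post) was created with `ansible-vault create vars/secrets.yml --vault-password-file .vault_pass.txt`. A play could then load it like this:

```yaml
# Hypothetical play; vars/secrets.yml is assumed to be vault-encrypted.
# The vars_files path is relative to the playbooks/ directory.
- name: Configure database servers with encrypted variables
  hosts: databases
  vars_files:
    - ../vars/secrets.yml
  roles:
    - database
```

Such a play would be run with `ansible-playbook playbooks/site.yml --vault-password-file .vault_pass.txt`, and .vault_pass.txt should stay out of version control.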

Setting Up CI with GitHub Actions

I added a GitHub Actions workflow to lint my Ansible code, ensuring quality before applying changes.

Install Linting Tools

    pip install ansible-lint yamllint

Create the Workflow

In .github/workflows/ci.yml:

    ---
    name: Ansible Lint

    on:
      push:
        branches: [main]
      pull_request:
        branches: [main]

    jobs:
      lint:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v3

          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.10'

          - name: Install dependencies
            run: |
              python -m pip install --upgrade pip
              pip install ansible ansible-lint yamllint

          - name: Lint Ansible playbooks
            run: |
              ansible-lint playbooks/*.yml -x vault
              yamllint .

Configure yamllint

In .yamllint:

    ---
    extends: default
    rules:
      comments:
        min-spaces-from-content: 1
      comments-indentation: false
      braces:
        max-spaces-inside: 1
      octal-values:
        forbid-implicit-octal: true
        forbid-explicit-octal: true
      truthy:
        ignore: |
          .github/workflows/ci.yml
    ignore: |
      vars/secrets.yml

Testing the Setup

With the roles and playbook ready, I used Vagrant to test the configurations on local VMs, simulating the node structure.

Create a Vagrantfile

In Vagrantfile, I defined four VMs matching the inventory:

    Vagrant.configure("2") do |config|
      config.vm.box = "ubuntu/focal64"

      (1..2).each do |i|
        config.vm.define "web#{i}" do |web|
          web.vm.hostname = "web#{i}"
          web.vm.network "private_network", ip: "192.168.56.10#{i}"
        end
      end

      config.vm.define "db01" do |db|
        db.vm.hostname = "db01"
        db.vm.network "private_network", ip: "192.168.56.103"
      end

      config.vm.define "monitor01" do |monitor|
        monitor.vm.hostname = "monitor01"
        monitor.vm.network "private_network", ip: "192.168.56.104"
      end
    end
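
The forwarded SSH ports in the inventory (2222, 2200, and so on) are assigned by Vagrant and can change if a port is already in use. Since each VM also gets a fixed private IP here, one alternative is to point the inventory at those addresses on the default SSH port. The entry below is a sketch of that alternative, not the setup used in this post:

```yaml
# Alternative host entry for inventories/development/hosts.yml (illustrative).
# 192.168.56.101 is the private_network IP the Vagrantfile assigns to web1.
web01:
  ansible_host: 192.168.56.101
  ansible_port: 22
  ansible_ssh_private_key_file: .vagrant/machines/web1/virtualbox/private_key
```

Running `vagrant ssh-config` prints the host, port, and key path Vagrant actually assigned to each machine, which is a quick way to check the inventory against reality.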

Start the VMs and Run the Playbook

I started the VMs and ran the playbook to apply the roles:

    vagrant up
    ansible-playbook playbooks/site.yml

Check the playbook output for errors or skipped tasks to confirm all configurations were applied successfully.
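
Beyond reading the run output, a short verification play can confirm the result. The following is a minimal sketch, assuming nginx serves its default page on port 80. It is not part of the original site.yml, and the file name playbooks/verify.yml is hypothetical:

```yaml
# Hypothetical smoke test; could be saved as playbooks/verify.yml.
- name: Verify web servers respond
  hosts: webservers
  gather_facts: false
  tasks:
    # uri runs on the managed host, so localhost here is the web server itself.
    - name: Request the default nginx page from the host itself
      ansible.builtin.uri:
        url: http://localhost/
        status_code: 200
```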

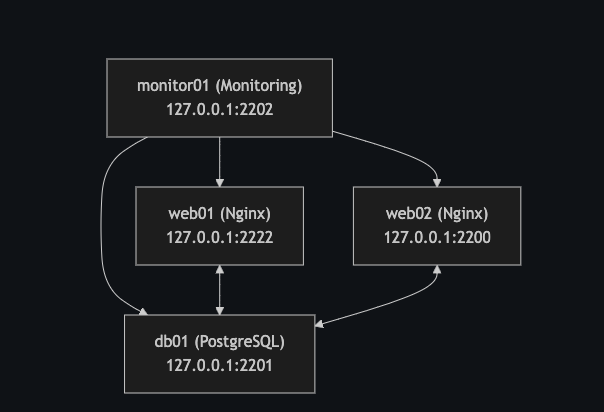

Visualizing the Infrastructure

Below is a diagram illustrating the intended infrastructure design for this project. It shows how the nodes could interact after setting up the necessary connections, such as configuring the web servers to connect to the database and enabling the monitoring node to observe the others.

Diagram of the infrastructure managed by Ansible

Cleaning Up

I shut down the VMs after testing:

    vagrant halt

Use `vagrant destroy -f` to completely remove the VMs and free up disk space if you no longer need the test environment.

Conclusion

This Ansible project automated server configurations across multiple nodes, applying a common role for basic setups and specific roles to configure web servers with Nginx, a database with PostgreSQL, and a monitoring node with tools like htop. Testing with Vagrant and linting with GitHub Actions added reliability, aligning with DevOps and SRE practices for infrastructure management.